I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.

― Abraham H. Maslow

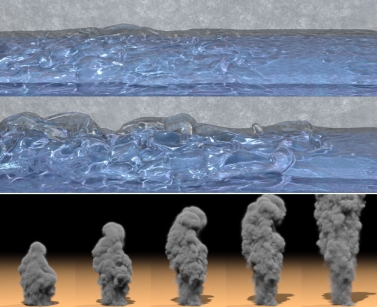

Nothing stokes the imagination about the power of computing to shape scientific discovery like direct numerical simulation (DNS). Imagine using the magic of the computer to unveil the secrets of the universe. We simply solve the mathematical equations that describe nature accurately at immense precision, and magically truth comes out the other end. DNS also stokes the demand for computing power: the bigger the computer, the better the science and discovery. As an added bonus, the visualizations of the results are stunning, with almost Hollywood-quality special-effects appeal. It provides the perfect sales pitch for the acquisition of the new supercomputer and everything that goes with it. With a faster computer, we can just turn it loose and let the understanding flow like water bursting through a dam. With the power of DNS, the secrets of the universe will simply submit to our mastery!

If science were only that easy. It is not, and this sort of thing is a marketing illusion for the naïve and foolish.

The saddest thing about DNS is the tendency for scientists’ brains to almost audibly click into the off position when it is invoked. All one has to say is that their calculation is a DNS, and almost any question or doubt leaves the room. No need to look deeper or think about the results; we are solving the fundamental laws of physics with stunning accuracy! It must be right! They will assert that this is a “first principles” calculation, and predictive at that. Simply marvel at the truths waiting to be unveiled in the sea of bits. Add a bit of machine learning or artificial intelligence to navigate the massive dataset produced by DNS (the datasets are so fucking massive, they must have something good! Right?) and you have the recipe for the perfect bullshit sandwich. How dare some infidel cast doubt or uncertainty on the results! Current DNS practice is a religion within the scientific community, and it brings an intellectual rot into the core of computational science. DNS reflects some of the worst wishful thinking in the field, where the desire for truth and understanding overwhelms good sense. A more damning assessment would be a tendency to submit to intellectual laziness when pressed by expediency or difficulty in making progress.

Let’s unpack this issue a bit and get to the core of the problems. First, I will submit that DNS is an unambiguously valuable scientific tool. A large body of work valuable to a broad swath of science can benefit from DNS. We can study our understanding of the universe in myriad ways at phenomenal detail. On the other hand, DNS is never a substitute for observations. We do not know the fundamental laws of the universe with such certainty that the solutions provide an absolute truth. The laws we know are models, plain and simple. They will always be models. As models, they are approximate and incomplete by their basic nature. This is how science works: we have a theory that explains the universe, and we test that theory (i.e., model) against what we observe. If the model reproduces the observations with high precision, the model is confirmed. This model confirmation is always tentative and subject to being tested with new or more accurate observations. Solving a model does not replace observations, ever, and some uses of DNS are masking laziness or limitations in observational (experimental) science.

To acquire knowledge, one must study;

but to acquire wisdom, one must observe.

― Marilyn Vos Savant

One place where the issue of DNS comes to a head is validation. In validation, a code (i.e., model) is compared with experimental data for the purpose of assessing the model’s ability to describe nature. In DNS, we assume that nature is so well understood that our model can describe it in detail; the leap too far is saying that the model can replace observing nature. This presumes that the model is completely and totally validated. I find this to be an utterly ludicrous prospect. All models are tentative descriptions of reality, and intrinsically limited in some regard. The George Box maxim immediately comes to mind: “all models are wrong”. This is axiomatically true, and being wrong, models cannot be used to validate. Yet with DNS, exactly this is suggested as a course of action, violating the core principles of the scientific method for the sake of convenience. For the sake of scientific progress, we should not allow this practice. It is anathema to the scientific method.

This does not say that DNS is not useful. DNS can produce scientific results that may be used in a variety of ways where experimental or observational results are not available. This is a way of overcoming a limitation of what we can tease out of nature. Such use should always come with the proviso that it is an expedient, adopted in the absence of observational data. Observational evidence should always be sought and the models should always be subjected to tests of validity. The results come from assuming the model is very good, and they provide value, but they cannot be used to validate the model. DNS is always second best to observation. Turbulence is a core example of this principle: we do not understand turbulence; it is an unsolved problem. DNS as a model has not yielded understanding sufficient to unveil the secrets of the universe. They are still shrouded. Part of the issue is the limitations of the model itself. In turbulence, DNS almost always utilizes an unphysical model of fluid dynamics, one that lacks thermodynamics and has infinitely fast acoustic waves. Being unphysical in its fundamental character, how can we possibly consider it a replacement for reality? Yet in a violation of common sense driven by frustration at the lack of progress, we do this all the time.

One of the worst aspects of the entire DNS enterprise is the tendency to do no assessment of uncertainty with its results. Quite often the results of DNS are delivered without any estimate of the uncertainty in the approximation or the model. Most often no uncertainty at all is included, estimated or even alluded to. The results of DNS are still numerical approximations with approximation error. The models, while detailed and accurate, are always approximations and idealizations of reality. This aspect of the modeling must be included for the work to be used for high-consequence work. If one is going to use DNS as a stand-in for experiment, this is the very least that must be done. The uncertainty assessment should also include the warning that the validation is artificial and not based on reality. If there isn’t an actual observation available to augment the DNS in the validation, the reader should be suspicious, and the smell of bullshit should alert one to deception.
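As a rough illustration of what the bare minimum numerical uncertainty estimate could look like, the sketch below applies Richardson extrapolation and Roache's grid convergence index (GCI) to a single scalar output computed on three grids. This is a standard verification technique that I am swapping in for illustration, not something the discussion above prescribes. The function names, the constant refinement ratio `r`, the safety factor of 1.25, and the sample numbers are all assumptions, and the approach presumes the three solutions sit in the asymptotic convergence range, which is far from guaranteed for turbulent flow statistics.

```python
import math


def observed_order(f_coarse, f_medium, f_fine, r):
    """Observed convergence order from three grids refined by a constant ratio r."""
    d_coarse = abs(f_coarse - f_medium)
    d_fine = abs(f_medium - f_fine)
    if d_coarse == 0.0 or d_fine == 0.0:
        raise ValueError("solutions do not differ; cannot estimate an order")
    return math.log(d_coarse / d_fine) / math.log(r)


def richardson_estimate(f_medium, f_fine, r, p):
    """Extrapolated estimate of the grid-converged value."""
    return f_fine + (f_fine - f_medium) / (r**p - 1.0)


def gci_fine(f_medium, f_fine, r, p, safety=1.25):
    """Relative numerical uncertainty band (GCI) on the fine-grid result."""
    relative_change = abs((f_fine - f_medium) / f_fine)
    return safety * relative_change / (r**p - 1.0)


# Synthetic data (hypothetical): f(h) = 1 + 0.5 * h**2 at h = 0.4, 0.2, 0.1
f_coarse, f_medium, f_fine = 1.08, 1.02, 1.005
r = 2.0

p = observed_order(f_coarse, f_medium, f_fine, r)
f_extrapolated = richardson_estimate(f_medium, f_fine, r, p)
uncertainty = gci_fine(f_medium, f_fine, r, p)

print(f"observed order      : {p:.3f}")
print(f"extrapolated value  : {f_extrapolated:.6f}")
print(f"fine-grid GCI (rel.): {uncertainty:.4%}")
```

An observed order far from the scheme's formal order is itself useful information: it suggests the calculation is not yet resolved well enough for the error estimate, or the "DNS" label, to be trusted.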

Some of our models are extremely reliable, and have withstood immense scrutiny. These models are typically the subject of DNS. A few are worth discussing: Schrödinger’s equation for quantum physics, molecular and atomic dynamics, and the Navier-Stokes equations for turbulence. These models are frequent topics of DNS investigations, and none of them is reality. The equations are mathematics, a logical constructive language of science, but not actual reality. These equations are unequal in terms of their closeness to fundamentality, but our judgment should be the same. The closeness to “first principles” should be reflected in the assessment of uncertainty, which should also reflect the problem being solved by the DNS. None of these equations will yield truths so fundamental as to be beyond question or free of uncertainty.

When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

― Arthur C. Clarke

Another massive problem with DNS is the general lack of uncertainty assessment. It is extremely uncommon to see any sort of uncertainty assessment accompanying DNS. If we accept the faulty premise that DNS can replace experimental data, the uncertainty associated with these “measurements” must be included. This almost universally shitty practice further undermines the case for using DNS as a replacement for experiment. Of course, we are accepting far too many experimental results without their own error bars these days. Even if we grant the false premise that the model being solved by DNS is true to the actual fundamental laws, the solution is still approximate. The approximate solution is never free of numerical error. In DNS, the estimate of the magnitude of approximation error is almost universally lacking from results.

Let’s be clear: even when used properly, DNS results must come with an uncertainty assessment. Even when DNS is used simply as a high-fidelity solution of a model, the uncertainty of the solution is needed to assess the utility of the results. This utility is ultimately determined by some comparison with observations of phenomena seen in reality. We may use DNS to measure the power of a simpler model to provide consistency with the more fundamental model included in DNS. This sort of utility is widespread in turbulence, materials science and constitutive modeling, but the credibility of the use must always be determined with experimental data. The observational data always has primacy, and DNS should always be subservient to reality’s results.

Unfortunately, we also need to address an even more deplorable DNS practice. Sometimes people simply declare that their calculation is a DNS without any evidence to support this assertion. Usually this means the calculation is really, really, really, super fucking huge and produces some spectacular graphics with movies and color (rendered in super groovy ways). Sometimes the models being solved are themselves extremely crude or approximate. For example, the Euler equations are solved, with or without turbulence models, instead of Navier-Stokes in cases where turbulence is certainly present. This practice is so abominable as to be almost a cartoon of credibility. This is the use of proof by overwhelming force. Claims of DNS should always be taken with a grain of salt. When the claims take the form of marketing, they should be met with extreme doubt, since this is a form of bullshitting that tarnishes those working to practice scientific integrity.

The world is full of magic things, patiently waiting for our senses to grow sharper.

― W.B. Yeats

Part of doing science correctly is honesty about challenges. Progress can be made with careful consideration of the limitations of our current knowledge. Some of these limits are utterly intrinsic. We can observe reality, but various challenges limit the fidelity and certainty of what we can sense. We can model reality, but these models are always approximate. The models encode simplifications and assumptions. Progress is made by putting these two forms of understanding into tension. Do our models predict or reproduce the observations to within their certainty? If so, we need to work on improving the observations until they challenge the models. If not, the models need to be improved so that the observations are reproduced. The current use of DNS short-circuits this tension and acts to undermine progress. It wrongly puts modeling in the place of reality, which only works to derail necessary work on improving models, or work to improve observation. As such, poor DNS practices are actually stalling scientific progress.
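The tension between model and observation can be written down as a simple decision rule. The sketch below is one hedged way to do it, not a definitive validation metric: the function name `validation_check`, the returned strings, and the sample numbers are hypothetical, and combining the two uncertainties by root-sum-square assumes they are independent and quoted at the same confidence level.

```python
import math


def validation_check(model_value, model_unc, observed_value, observed_unc):
    """Compare a model result with an observation, each with its own uncertainty.

    Assumption: the uncertainties are independent and at the same confidence
    level, so they are combined by root-sum-square.
    """
    if model_unc < 0.0 or observed_unc < 0.0:
        raise ValueError("uncertainties must be non-negative")

    discrepancy = abs(model_value - observed_value)
    combined_unc = math.hypot(model_unc, observed_unc)

    if discrepancy <= combined_unc:
        # The model reproduces the observation within the certainty available:
        # sharper observations are needed to challenge the model.
        next_step = "improve the observations"
    else:
        # The observation is not reproduced: the model needs work.
        next_step = "improve the model"

    return {
        "discrepancy": discrepancy,
        "combined_uncertainty": combined_unc,
        "next_step": next_step,
    }


# Hypothetical numbers, for illustration only
print(validation_check(1.00, 0.02, 1.01, 0.02))
print(validation_check(1.00, 0.02, 1.10, 0.02))
```

Note that the rule cannot even be evaluated unless both numbers arrive with an uncertainty, and `observed_value` has to come from an actual observation, not from another calculation.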

I believe in evidence. I believe in observation, measurement, and reasoning, confirmed by independent observers. I’ll believe anything, no matter how wild and ridiculous, if there is evidence for it. The wilder and more ridiculous something is, however, the firmer and more solid the evidence will have to be.

― Isaac Asimov

Bill,

I disagree with this sentiment rather strongly, in particular your boldfaced declaration that models cannot be used to validate. I assume from your acceptance of (good) DNS as useful that you would agree that comparison of results produced with lower-fidelity models to DNS results is also a useful exercise, so I don’t need to defend the practice in general. There is a somewhat philosophical taxonomy question, though, of what such an exercise actually is. In my opinion, it is a validation exercise. I find some of the simplest words on V&V are the most powerful I’ve come across, P.J. Roache’s near poetic quip:

Verification is solving the equations right; Validation is comparing to experimental observations.

Wait… is that right? No. Actually, it was:

Verification is solving the equations right; Validation is solving the right equations.

At its most fundamental level, validation is about determining if the equations we are solving are an accurate representation of the physics they are intended to represent. Sometimes you can make these assessments, at least in part, by comparing to higher-fidelity model representations of the same physics. I ran into similar challenges getting a presentation into a validation session at the 2016 V&V meeting (or maybe that was also you) where we compared continuum models for multiphase particulate flows derived from a kinetic theory (out to Navier-Stokes order) against DNS data. There are A LOT of assumptions rolled into these models: assumptions about the particle properties (perfectly spherical, exactly monodisperse, frictionless), assumptions about the interstitial fluid (incompressible, constant material properties, no shear or particle induced turbulence), assumptions about the collisions (instantaneous, binary, normal dissipation characterized by a constant coefficient of restitution), and assumptions about the kinetic theory (molecular chaos, near-Maxwellian distribution, existence of a normal solution and a small Kn # for the perturbation expansion). If I understood the KT better, I’m sure there would be more to list. But, for me, that’s already a ton, and (IMO) taking a model like this and going straight to experimental data is a terrible idea because you’re exercising all of these assumptions simultaneously. If you get bad results, it’s hard to figure out why. Even worse, if you get good results it could easily be the result of two or more assumptions being bad in “opposing directions” and canceling each other out. Validating this kind of model against DNS data FIRST is better b/c it isolates assumptions. I don’t think anyone would attest that it validates the model completely or gives you any confidence in the treatment of particle/collision/fluid assumptions that are also present in the DNS.

That’s maybe part of the issue: you and a reviewer or organizer for VV16 (I forget at this point) used the word REPLACE. This isn’t a replacement for validation against experimental data. It can’t be; it doesn’t exercise all of the modelling assumptions. There can be, maybe should be, more than one step in the validation process.

Will

(William D. Fullmer)

ps sorry if this gets posted twice, apparently I already had an account associated with my gmail and by the time I got the password reset and logged in it was gone

Thank you for the thoughtful comment. I might put DNS’s utility in a different place: it is a very high-grade form of code comparison. It provides confidence, but not truth. It tests the consistency of a model with the same assumptions going into the DNS, but not the validity of the equations themselves.
